As a website owner, you may have come across the term “robots.txt” and wondered what it is all about. In simple terms, robots.txt is a text file that instructs search engine crawlers on which pages of your website they should or should not index. It serves as a way to communicate with search engines and control how they access your website’s content.

While it may seem like a small and insignificant file, having a well-optimized robots.txt can have a significant impact on your website’s search engine rankings and overall user experience. In this comprehensive guide, we will delve deeper into the world of robots.txt, its importance, and how you can use it to optimize your website for both search engines and users.

Overview of robots.txt

Definition and purpose

Robots.txt, also known as the robot’s exclusion protocol, is a plain text file that is placed in the root directory of a website. It was first implemented in 1994 by Martijn Koster, a Dutch computer scientist, to give website owners a way to control how search engine crawlers access and index their website’s content.

Robots.txt, also referred to as the robot’s exclusion protocol, is a text file placed in the main directory of a website

In simple terms, robots.txt is like a “do not disturb” sign for search engine crawlers. It tells them which pages they are allowed to visit and which ones they should avoid. This file is essential for websites that do not want certain pages or sections of their website to be indexed by search engines.

How it works

When a search engine crawler visits a website, the first thing it looks for is the robots.txt file. If it finds one, it reads the directives in the file and follows them accordingly. These directives act as instructions for the crawler on which pages to crawl and which ones to skip.

If there is no robots.txt file present, the search engine crawler will assume that it has permission to crawl and index all the pages on the website. This can lead to unnecessary crawling and indexing of pages that you may not want to show up in search engine results pages (SERPs).

Creating a robots.txt file

Creating a robots.txt file is easy, but it is crucial to get it right to avoid any issues with your website’s indexing and ranking. Here’s a step-by-step guide on how to create a robots.txt file for your website:

- Open a text editor: The first step is to open a plain text editor such as Notepad or TextEdit. Avoid using word processors like Microsoft Word, as they may add additional formatting to the file, making it unreadable by search engine crawlers.

- Add the necessary directives: The next step is to add the necessary directives to your robots.txt file. We will discuss these directives in detail in the next section, but some basic ones include User-agent, Disallow, and Allow.

- Save the file as “robots.txt”: Once you have added all the necessary directives, save the file as “robots.txt” and make sure to select “all files” in the “save as” dialog box.

- Upload the file to your website’s root directory: Once you have saved the file, upload it to your website’s root directory using an FTP client or through your web hosting control panel.

Proper format and syntax

To ensure that search engine crawlers can understand and follow the directives in your robots.txt file, it is crucial to use the correct format and syntax. The following are some basic rules to keep in mind when creating a robots.txt file:

- Begin with specifying the user-agent, which is the name of the search engine crawler you want to give instructions to. This can be Googlebot, Bingbot, Yandexbot, etc.

- Use a forward slash (/) to specify the root directory of your website, followed by the specific page or folder you want to give instructions for.

- Use “Disallow:” to instruct search engine crawlers not to crawl a particular page or folder.

- Use “Allow:” to instruct search engine crawlers to crawl a particular page or folder.

- Use the asterisk () character as a wildcard. For example, “Disallow: /images/” will disallow crawling of all pages within the “images” folder.

- Use a hashtag () for comments. Any line beginning with a hashtag will be ignored by search engine crawlers.

- Use a new line for each directive for better readability.

Tools and resources for creating one

Creating a robots.txt file from scratch can be a daunting task, especially if you are not familiar with the proper format and syntax. Thankfully, there are many tools and resources available online that can help you create a robots.txt file with ease.

Some popular tools include

- Google’s official robots.txt testing tool: This tool allows you to test your robots.txt file on your website and see how it will affect your website’s indexing and crawling.

- Robots.txt Generator by SEOBook: This tool allows you to generate a robots.txt file by simply entering the pages you want to block or allow.

- Yoast SEO plugin for WordPress: If you are using WordPress, you can use the Yoast SEO plugin to easily create and manage your robots.txt file.

Understanding directives in robots.txt

Directives are the key components of a robots.txt file. They tell search engine crawlers what actions to take when crawling your website. Let’s take a look at the three main directives used in robots.txt and how they work.

User-agent directive

The “User-agent” directive specifies the name of the search engine crawler that the following directives apply to. For example, if you want to give instructions to Googlebot, you would specify “User-agent: Googlebot”.

You can also use the wildcard () character to specify all user-agents. For example, “User-agent: ” applies to all search engine crawlers.

Disallow directive

The “Disallow” directive tells search engine crawlers which pages or folders they should not crawl or index. For example, if you don’t want search engine crawlers to access any page within a particular folder, you would use “Disallow: /foldername/”.

You can also use the wildcard () character to disallow access to all pages or folders within a specific directory. For example, “Disallow: /images/” will disallow access to all pages within the “images” folder.

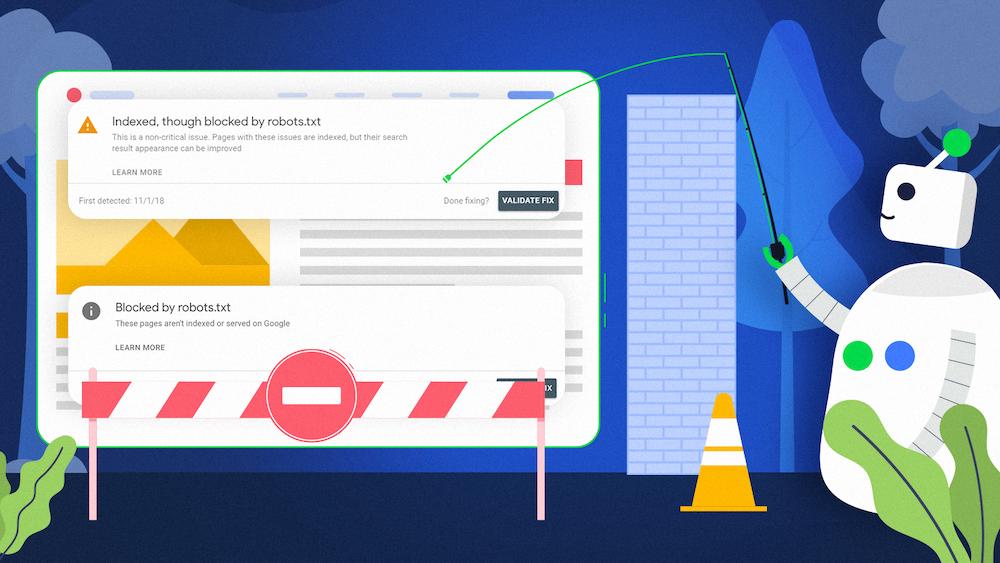

It is important to note that disallowing a page or folder in robots.txt does not guarantee that it will not be indexed. Search engine crawlers may still find and index these pages through other means, such as incoming links.

Allow directive

The “Allow” directive instructs search engine crawlers to access and index a specific page or folder. This directive is commonly used when you have disallowed access to a particular directory, but want to allow access to a specific page within that directory.

For example, if you have disallowed access to the “images” folder, but want to allow access to a specific image within that folder, you would use the “Allow” directive. It works in the same way as the “Disallow” directive, using the forward slash (/) and wildcard (*) characters.

Common mistakes to avoid in robots.txt

While robots.txt can be an effective tool for controlling how search engine crawlers access your website’s content, it is essential to avoid some common mistakes that can have adverse effects on your website’s indexing and crawling. Here are some mistakes to avoid when creating and managing your robots.txt file:

A frequent error made by website owners is unintentionally restricting access to critical pages or directories in their robots.txt file

Blocking important pages

One of the most common mistakes website owners make is accidentally blocking important pages or directories in their robots.txt file. This can happen if you are not familiar with the proper syntax or if there are any typos in your directives.

For example, if you accidentally use “Disalllow” instead of “Disallow”, search engine crawlers will ignore that directive and assume they have permission to crawl the page. This can result in sensitive information being indexed by search engines, which can be detrimental to your website’s security.

To avoid this mistake, always double-check your directives and test your robots.txt file regularly using tools like Google’s official robots.txt testing tool.

Using incorrect syntax

As mentioned earlier, using incorrect syntax in your robots.txt file can render it unreadable by search engine crawlers. One of the most common syntax errors is not using a forward slash (/) at the end of each directive.

For example, “Disallow: /foldername” will block all pages within the “foldername” directory, but “Disallow: /foldername/” will block the entire directory.

It is crucial to follow the correct syntax to ensure that your directives are read and followed by search engine crawlers.

Not updating when necessary

Your website’s content and structure may change over time, which means that your robots.txt file may need to be updated accordingly. For example, if you have added new pages or restructured your website’s navigation, you may need to add new directives to your robots.txt file.

It is essential to regularly review and update your robots.txt file to ensure that it reflects the current state of your website.

Best practices for optimizing robots.txt

Now that we have covered the basics of robots.txt and how to avoid common mistakes, let’s take a look at some best practices for optimizing your robots.txt file.

Keep it simple and organized

The first rule of creating an effective robots.txt file is to keep it simple and organized. Overcomplicating your directives and adding unnecessary lines can lead to confusion and mistakes.

Always use proper indentation and leave blank lines to make your robots.txt file more readable. This will also make it easier to find and fix any errors in your directives.

Test and monitor regularly

As mentioned earlier, it is crucial to test your robots.txt file using tools like Google’s official testing tool. This will help you identify any errors or issues with your directives and fix them before they cause any problems.

It is also recommended to regularly monitor your website’s crawling and indexing using tools like Google Search Console. This will give you insights into how search engine crawlers are accessing your website and if there are any issues with your robots.txt file.

Use it as a part of your SEO strategy

While robots.txt primarily serves as a way to control how search engine crawlers access your website, it can also be used as a part of your SEO strategy. By properly disallowing or allowing pages, you can direct search engine crawlers to focus on the most important pages on your website.

For example, if there are certain pages on your website that you do not want to rank in search results, you can use the “Disallow” directive to prevent them from being indexed. This will help search engines prioritize other pages that you want to rank higher.

The future of robots.txt

In July 2019, Google announced a new standard for robots.txt that aims to simplify how website owners control the crawling and indexing of their websites. The new standard, called the Robots Exclusion Protocol (REP), introduces two major changes:

Website owners must remain vigilant about such modifications and promptly update their robots.txt files as needed

- Support for all search engine crawlers: Previously, different search engine crawlers had different interpretations of robots.txt directives, which often led to confusion and mistakes. With the new standard, all search engine crawlers will follow the same rules, making it easier for website owners to manage their robots.txt files.

- Introduction of the “allow:” directive: The new standard adds support for the “allow:” directive, which allows website owners to specify which pages or directories they want to allow access to. This is useful for websites with large numbers of pages that need to be disallowed but have a few important pages that need to be crawled.

These changes are expected to come into effect on September 1, 2019, and are likely to bring significant changes to how robots.txt is used and managed. It is crucial for website owners to keep an eye out for these changes and make necessary updates to their robots.txt files.

Conclusion

Robots.txt may seem like a small and insignificant file, but it plays a critical role in how search engine crawlers’ access and index your website’s content. By understanding its functionality and using best practices for creating and managing it, you can ensure that your website is properly crawled and indexed by search engines.

Remember to regularly monitor and test your robots.txt file to avoid any issues or mistakes that can impact your website’s SEO and user experience. With the upcoming changes to the robots.txt standard, it is more important than ever to stay updated and optimized for better search engine rankings.